Despite the considerable attention on generative AI, many of its current uses across security operations have taken the form of chatbots, which are helpful but don’t significantly reduce the operational burden on analysts.

Instead of limiting AI capabilities to chatbot functionalities, security operations should leverage autonomous AI agents, known as agentic AI. These agents represent a significant leap forward in the capabilities of AI with their ability to make independent decisions. In this blog, we’ll detail how an AI agent can take generative AI a step further.

What are Security AI Agents?

Agentic AI, or an AI Agent, is a technology that can understand and solve problems without human intervention while continuously learning and improving. Unlike generative AI, which typically just summarizes events and alerts, AI agents are designed to think, act, and learn, making real-time decisions to address security incidents.

Generative AI is Just One Tool of Agentic AI

While AI chatbots simplify initial data collection by giving quick access to information through direct prompts and queries, they still leave the analysis and decision-making to analysts. Their tendency to “hallucinate,” or generate incorrect information, can lead to serious security oversights, making them difficult to fully rely on in critical security environments.

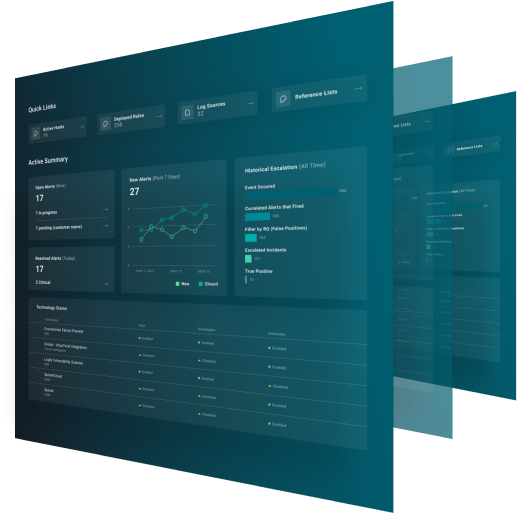

Security operations need to take AI further. In our previous blog in this series, we introduced the ReliaQuest approach to agentic AI through the GreyMatter AI Agent. AI agents can autonomously set goals, make decisions, and take action without constant human intervention. They can also continuously learn and self-improve, adapting to the unique environment of each organization. Ultimately, they enable organizations to contain threats in minutes, reduce the workload of Tier 1 and Tier 2 tasks, and achieve real-time, contextual outcomes.

To provide these outcomes to organizations, the GreyMatter AI Agent leverages whichever advanced technologies and security tools are necessary, including:

- Generative AI: While the AI Agent is more sophisticated than generative AI chatbots, it does leverage generative AI through the use of AI tools. These AI tools serve as building blocks used to accomplish specific tasks within the AI Agent’s workflow.

- Existing security tools: By using data sources in a customer’s environment, the AI agent can gather relevant data and tailor investigations and responses to the specific context of each environment, enhancing accuracy and effectiveness.

- Threat intelligence: Integrating real-time threat intelligence enables the AI agent to seek any additional context it needs, such as indicators of compromise (IoCs) or the tactics, techniques, and procedures (TTPs) of threat actors.

Each of these tools is used throughout the GreyMatter AI Agent’s workflow to effectively investigate, contain, and remediate threats.
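
To make the "tools as building blocks" idea concrete, here is a minimal sketch of a toolbox that an agent loop can call by name. Everything in it is an assumption made for illustration: the tool names (`summarize`, `query_siem`, `threat_intel`), the `run_plan` helper, and the canned return values are hypothetical stand-ins and do not describe how the GreyMatter AI Agent is actually built.

```python
from typing import Callable, Dict, List

# Hypothetical tool registry: each tool is a plain function that takes an
# alert (a dict) and returns a dict of findings.
Tool = Callable[[dict], dict]
TOOLS: Dict[str, Tool] = {}


def tool(name: str) -> Callable[[Tool], Tool]:
    """Register a function in the toolbox under the given name."""
    def register(fn: Tool) -> Tool:
        TOOLS[name] = fn
        return fn
    return register


@tool("summarize")
def summarize(alert: dict) -> dict:
    # Stand-in for a generative AI call; here it only formats a string.
    return {"summary": f"{alert['type']} alert for {alert['user']}"}


@tool("query_siem")
def query_siem(alert: dict) -> dict:
    # Stand-in for a lookup against an existing security tool.
    return {"related_events": 3}


@tool("threat_intel")
def threat_intel(alert: dict) -> dict:
    # Stand-in for a threat intelligence lookup on the source IP.
    return {"ip_flagged": alert["src_ip"] in {"203.0.113.7"}}


def run_plan(alert: dict, plan: List[str]) -> dict:
    """Run each named tool in order and merge the findings."""
    findings: dict = {}
    for name in plan:
        findings.update(TOOLS[name](alert))
    return findings


alert = {"type": "phishing", "user": "jdoe", "src_ip": "203.0.113.7"}
result = run_plan(alert, ["summarize", "query_siem", "threat_intel"])
print(result)
```

In this sketch the plan is a fixed list of tool names. An actual agent would presumably choose and order the tools itself based on the alert.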

The AI Agent Toolbox for Phishing, Malware, and Credential Compromise

Using AI tools, AI Agents can handle entire end-to-end workflows with little to no human input. Below, we describe how agentic AI utilizes these different tools during phishing, malware, and compromised-credential attacks.

Phishing

- Reported Emails: The AI Agent can automatically analyze, categorize, and respond to each reported email. For certain steps, such as retrieving data from security tools like a SIEM, it uses a generative AI tool, which lets it complete initial triage and basic analysis of reported emails.

- Quarantined Emails: By leveraging a Secure Email Gateway (SEG) security tool, the AI Agent can capture quarantined emails and then use an intel enrichment AI tool to check whether IP addresses, URLs, and attachments in the emails are malicious.
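
The enrichment step for quarantined emails can be sketched roughly as follows: pull URLs and IP addresses out of the message body and compare them against intel data. The `KNOWN_BAD_DOMAINS` and `KNOWN_BAD_IPS` sets, the function names, and the sample email are hypothetical; a real enrichment tool would query a threat intelligence service rather than a hard-coded set.

```python
import re
from ipaddress import ip_address
from urllib.parse import urlsplit

# Hypothetical intel data, hard-coded only for this sketch.
KNOWN_BAD_DOMAINS = {"login-example.test"}
KNOWN_BAD_IPS = {"198.51.100.23"}

URL_RE = re.compile(r"https?://[^\s\"'<>]+")
IPV4_RE = re.compile(r"\b(?:\d{1,3}\.){3}\d{1,3}\b")


def extract_indicators(body: str) -> dict:
    """Collect URLs and syntactically valid IPv4 addresses from an email body."""
    urls = URL_RE.findall(body)
    ips = []
    for candidate in IPV4_RE.findall(body):
        try:
            ips.append(str(ip_address(candidate)))
        except ValueError:
            pass  # looked like an IP (e.g. 999.1.1.1) but is not a valid one
    return {"urls": urls, "ips": ips}


def check_email(body: str) -> dict:
    """Flag the email if any extracted indicator appears in the intel sets."""
    indicators = extract_indicators(body)
    bad_urls = [u for u in indicators["urls"]
                if urlsplit(u).hostname in KNOWN_BAD_DOMAINS]
    bad_ips = [ip for ip in indicators["ips"] if ip in KNOWN_BAD_IPS]
    return {
        "malicious": bool(bad_urls or bad_ips),
        "bad_urls": bad_urls,
        "bad_ips": bad_ips,
    }


email_body = (
    "Reset your password at http://login-example.test/reset within 24h. "
    "Sent from 198.51.100.23."
)
report = check_email(email_body)
print(report)
```

Attachments are left out of this sketch. The simple URL pattern also keeps any trailing punctuation that directly follows a link, so production code would need a more careful parser.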

Malware

- Decoding Malware Scripts: The AI Agent can automatically analyze, decode, and categorize malware scripts. To do so, it leverages an intel enrichment tool to identify known malicious code patterns, as well as an AI tool to retrieve relevant information, perform contextual queries, and flag potential threats for further analysis.

- Malware Analysis: The AI Agent can automatically perform in-depth malware analysis. It uses threat intelligence feeds to check whether file hashes, behaviors, and signatures match known malware, and it employs generative AI tools to conduct behavioral analysis, retrieve relevant data, and generate detailed reports. The final assessment and response actions are left to the analyst.
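
The hash-matching part of malware analysis is simple enough to sketch. The example below computes a file's SHA-256 digest and checks it against a set of known-bad hashes. The `KNOWN_MALWARE_SHA256` set and the `triage_file` helper are hypothetical, and the "malware" bytes are a harmless placeholder; a real feed would supply hashes of actual samples, and behavior and signature matching are outside the scope of this sketch.

```python
import hashlib

# Hypothetical feed of known-bad hashes. The entry is derived from placeholder
# bytes so the example is self-contained; it is not a real malware hash.
KNOWN_MALWARE_SHA256 = {hashlib.sha256(b"placeholder-malicious-sample").hexdigest()}


def sha256_of(data: bytes) -> str:
    """Return the hex-encoded SHA-256 digest of the given bytes."""
    return hashlib.sha256(data).hexdigest()


def triage_file(data: bytes) -> dict:
    """Check a file's hash against the feed and prepare a note for the analyst."""
    digest = sha256_of(data)
    matched = digest in KNOWN_MALWARE_SHA256
    return {
        "sha256": digest,
        "known_malware": matched,
        # The final assessment stays with the analyst either way.
        "note": "hash matches known malware" if matched else "no hash match",
    }


hit = triage_file(b"placeholder-malicious-sample")
miss = triage_file(b"ordinary document contents")
print(hit["note"], "/", miss["note"])
```

A hash match is strong evidence, but a miss proves little, since a changed byte produces a different digest. That is one reason the workflow also looks at behaviors and signatures.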

Compromised Credentials

- Credential Stuffing Attacks: The AI Agent can leverage threat intelligence feeds to identify known compromised credentials and use generative AI tools integrated with web application firewalls (WAFs) to monitor login attempts across web applications.

The examples above show how the GreyMatter AI Agent utilizes various tools for just parts of common attacks. Next, we’ll provide a detailed example of a complete workflow for a specific attack type, password spraying.

Real-World Use Case for AI Agents: Password Spraying Attack

A password-spraying attack is a type of brute-force attack where an attacker attempts to gain unauthorized access by trying a few common passwords across many different usernames. Because the login attempts are spread out and each account sees only a handful of failures, this method avoids triggering account lockouts and is harder to detect.
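
One simple way to spot this pattern is to look for a source that fails logins against many distinct usernames while keeping the attempts per username low. The sketch below shows that heuristic. The `FailedLogin` record, the `find_spray_sources` function, and both thresholds are assumptions chosen for illustration, not values from any particular product.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, NamedTuple


class FailedLogin(NamedTuple):
    src_ip: str
    username: str


def find_spray_sources(
    events: Iterable[FailedLogin],
    min_users: int = 5,              # assumed threshold: distinct accounts targeted
    max_attempts_per_user: int = 3,  # assumed threshold: stays under lockout limits
) -> List[str]:
    """Return source IPs that fail against many users with few tries per user."""
    attempts: Dict[str, Dict[str, int]] = defaultdict(lambda: defaultdict(int))
    for event in events:
        attempts[event.src_ip][event.username] += 1

    flagged = []
    for ip, per_user in attempts.items():
        many_users = len(per_user) >= min_users
        few_tries_each = max(per_user.values()) <= max_attempts_per_user
        if many_users and few_tries_each:
            flagged.append(ip)
    return sorted(flagged)


# One source tries a single password against six accounts (spraying);
# another hammers one account twenty times (classic brute force).
spray = [FailedLogin("203.0.113.7", f"user{i}") for i in range(6)]
brute = [FailedLogin("198.51.100.9", "admin") for _ in range(20)]
suspects = find_spray_sources(spray + brute)
print(suspects)
```

A fuller version would also bound the events to a time window and account for attackers who rotate source addresses.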

If this type of attack is detected, the AI Agent starts by developing a plan to investigate it. Here’s the step-by-step breakdown of the plan and the specific generative AI tools it uses:

1. Identifies the Artifacts: The AI Agent utilizes an AI enrichment tool to gather additional information. This includes:

- Determining if the host has been involved in previous incidents or has any notable attributes.

- Checking if user accounts have been involved in previous incidents or have any notable attributes.

2. Enriches Alerts: An AI search tool queries logs related to the event to collect relevant telemetry, which helps the AI Agent understand the context of the failed login attempts and identify patterns.

3. Gathers Threat Intelligence: An IOC enrichment AI tool is used to gather information from threat intelligence tools. This includes verifying if the IP address is associated with known malicious activities or has been flagged in threat lists, ensuring the analysis is based on the most current threat intel available.

4. Analyzes Related Incidents: Historical context is reviewed using an AI tool that analyzes related incidents to identify whether they have been previously classified as true positives or false positives.

5. Makes a Determination: After analyzing the data, the AI Agent reviews the results to determine the nature of the incident. If the evidence suggests a true positive, the AI Agent proceeds with the incident response plan. If it’s a false positive, the findings are documented.
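
The five steps above can be sketched as a small pipeline in which each step adds evidence to a shared investigation record and the last step turns that evidence into a verdict. All of the data sources, function names, and the "two of three signals" decision rule below are hypothetical placeholders. The source does not say how the AI Agent weighs evidence, so the rule only shows where such logic would sit.

```python
from dataclasses import dataclass, field
from typing import Any, Dict, List

# Hypothetical stand-ins for the data each tool would query.
USERS_WITH_PRIOR_INCIDENTS = {"svc-backup"}
FAILED_LOGINS = [
    ("203.0.113.7", "alice"),
    ("203.0.113.7", "bob"),
    ("203.0.113.7", "svc-backup"),
]
FLAGGED_IPS = {"203.0.113.7"}
PAST_VERDICTS = {"203.0.113.7": ["true_positive", "false_positive"]}


@dataclass
class Investigation:
    src_ip: str
    usernames: List[str]
    evidence: Dict[str, Any] = field(default_factory=dict)


def identify_artifacts(inv: Investigation) -> None:
    # Step 1: which targeted accounts have a history of incidents?
    hits = set(inv.usernames) & USERS_WITH_PRIOR_INCIDENTS
    inv.evidence["users_with_history"] = sorted(hits)


def enrich_alert(inv: Investigation) -> None:
    # Step 2: count failed logins from this source in the (stubbed) logs.
    inv.evidence["failed_logins"] = sum(
        1 for ip, _user in FAILED_LOGINS if ip == inv.src_ip
    )


def gather_threat_intel(inv: Investigation) -> None:
    # Step 3: is the source IP on a (stubbed) threat list?
    inv.evidence["ip_flagged"] = inv.src_ip in FLAGGED_IPS


def analyze_related_incidents(inv: Investigation) -> None:
    # Step 4: how were earlier incidents from this source classified?
    past = PAST_VERDICTS.get(inv.src_ip, [])
    inv.evidence["past_true_positives"] = past.count("true_positive")


def make_determination(inv: Investigation) -> str:
    # Step 5: assumed rule for illustration, two of three signals must agree.
    e = inv.evidence
    signals = [
        e["ip_flagged"],
        e["past_true_positives"] > 0,
        e["failed_logins"] >= 3,
    ]
    return "true_positive" if sum(signals) >= 2 else "false_positive"


def investigate(inv: Investigation) -> str:
    """Run the evidence-gathering steps in order, then decide."""
    for step in (identify_artifacts, enrich_alert,
                 gather_threat_intel, analyze_related_incidents):
        step(inv)
    return make_determination(inv)


inv = Investigation("203.0.113.7", ["alice", "bob", "svc-backup"])
verdict = investigate(inv)
print(verdict, inv.evidence)
```

Keeping the evidence on one record means a false-positive verdict still leaves documented findings, which mirrors the last step of the plan.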

While chatbots serve as useful tools for initial data collection, agentic AI, with its autonomous decision-making and ability to leverage a robust toolkit, provides the depth and agility necessary to effectively manage and mitigate threats, such as the password-spraying attack in the example above. By moving beyond basic chatbot functionalities, organizations can harness the full power of AI to not only lighten the load on security analysts but also achieve faster, more accurate threat responses.

Next in Our Series: Evaluating AI Technologies

Autonomous AI agents like the GreyMatter AI Agent offer a more advanced and effective approach to security operations. However, it’s crucial to consider several key factors when adopting AI in any form.

In the next blog of this series, we will explore essential questions organizations should ask providers when adopting AI. We’ll cover critical areas such as the data used to train the AI system, quality control measures, and data security.